GPT-5 is live in ChatGPT and the API. It’s stronger on coding, math, and multimodal tasks, and it gives you new controls for reasoning and verbosity. GPT-4.1 still has the largest context window. Use GPT-5 for agents, code edits, and tool chains; use 4.1 when you truly need million-token single-shot prompts.

| Model | Input (per 1M tokens) | Cached input (per 1M tokens) | Output (per 1M tokens) |
| --- | --- | --- | --- |
| GPT-5 | $1.25 | $0.125 | $10.00 |
| GPT-5 mini | $0.25 | $0.025 | $2.00 |
| GPT-5 nano | $0.05 | $0.005 | $0.40 |

| Model | Context window (tokens) | Max output tokens | Notes |
| --- | --- | --- | --- |
| GPT-5 | 400,000 | 128,000 | Listed on GPT-5 product/API pages |
| GPT-5 mini | 400,000 | 128,000 | Same as above |
| GPT-5 nano | 400,000 | 128,000 | Same as above |
| GPT-4.1 | ~1,047,576 | See docs | Largest published window in OpenAI’s lineup |

ChatGPT now adjusts how much it “thinks” based on the task. It can give shorter answers when that’s better and expand when the task is complex. You can select the heavier thinking mode if you need it. Rollout is staged: Team now, Enterprise/Edu next.

Set reasoning_effort to minimal, low, medium, or high. Minimal keeps responses quick for straightforward tasks. Use high for complex logic, refactors, or long tool chains.

Control how long the answer is with verbosity (low, medium, or high). Use low for short actions and logs, and high for detailed write-ups.
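To make the two controls concrete, here is a minimal sketch that maps a task type to request settings. The task names and the `PROFILES` table are hypothetical, and the request shape (`reasoning={"effort": ...}`, `text={"verbosity": ...}`) is an assumption about the Responses-style API that you should confirm against the current SDK docs.

```python
# Hypothetical task profiles: (reasoning effort, verbosity). Tune for your workloads.
PROFILES = {
    "log_line": ("minimal", "low"),
    "refactor": ("high", "medium"),
    "report": ("medium", "high"),
}


def build_request(task: str, prompt: str, model: str = "gpt-5") -> dict:
    """Return keyword arguments for a Responses-style API call.

    Assumption: effort travels as reasoning={"effort": ...} and verbosity
    as text={"verbosity": ...}. Verify both against the SDK you use.
    """
    # Unknown tasks fall back to the cheapest reasoning setting.
    effort, verbosity = PROFILES.get(task, ("minimal", "medium"))
    return {
        "model": model,
        "input": prompt,
        "reasoning": {"effort": effort},
        "text": {"verbosity": verbosity},
    }
```

If the assumed shape holds, the result could be passed along as `client.responses.create(**build_request("refactor", "..."))`. Keeping the mapping in one table makes it easy to review and change per task.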

Instead of forcing JSON for every tool call, custom tools can accept plaintext (optionally constrained by a grammar). This reduces escaping issues with long strings and code blocks and improves multi-step agent reliability.
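The escaping issue is easy to see locally. The sketch below does not call any API. It only measures how many extra characters JSON string escaping adds to a small code payload, compared with sending the same payload as plaintext.

```python
import json

# A small code payload an agent might hand to a tool.
code = 'def greet(name):\n    return f"Hello, {name}!"\n'


def escape_overhead(payload: str) -> int:
    """Extra characters added by JSON string escaping, excluding the two outer quotes."""
    return len(json.dumps(payload)) - len(payload) - 2


# Each newline and each double quote becomes a two-character escape in JSON.
print(json.dumps({"code": code}))
print("extra characters:", escape_overhead(code))
```

The overhead grows with every quote, backslash, and newline in the payload, and each escape is one more place where a model can emit invalid JSON. Plaintext arguments avoid that class of failure.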

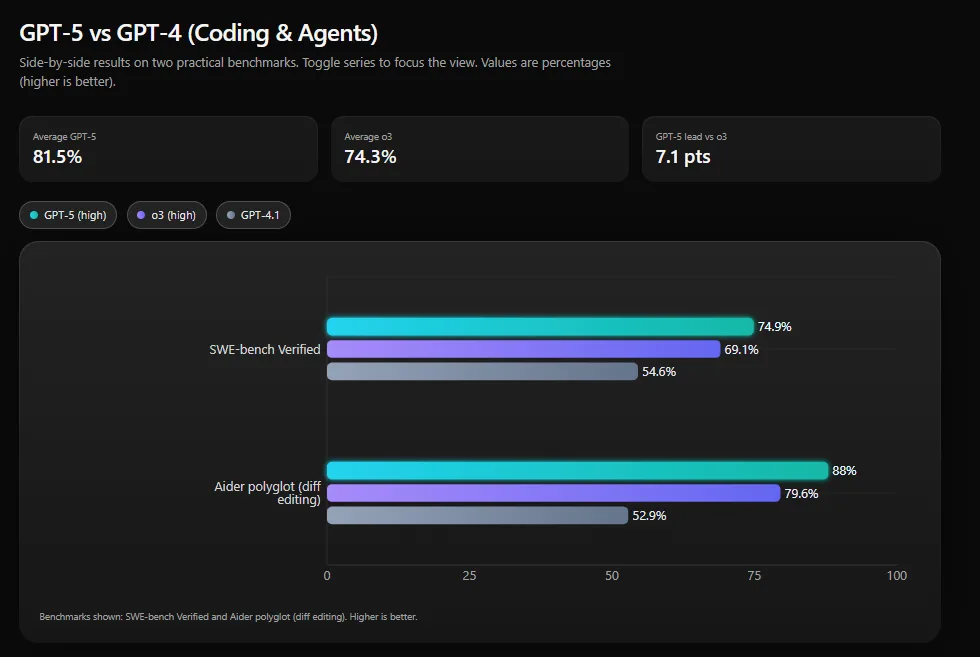

| Benchmark | GPT-5 (high) | o3 (high) | GPT-4.1 |
| --- | --- | --- | --- |
| SWE-bench Verified | 74.9% | 69.1% | 54.6% |
| Aider polyglot (diff editing) | 88.0% | 79.6% | 52.9% |

OpenAI also reports about 22% fewer output tokens and 45% fewer tool calls versus o3 at high effort on the same SWE-bench setup. That usually means fewer retries, quicker runs, and lower cost at similar or better quality.

| Benchmark | GPT-5 (high) | o3 (high) | GPT-4.1 |
| --- | --- | --- | --- |
| AIME ’25 (no tools) | 94.6% | 88.9% | n/a |
| GPQA Diamond (no tools) | 85.7% | 83.3% | 66.3% |
| HMMT 2025 (no tools) | 93.3% | 81.7% | 28.9% |
| MMMU (vision+text) | 84.2% | 82.9% | 74.8% |

These tests track structured reasoning, science knowledge, and visual understanding. If you work with charts, dashboards, or diagrams, you will likely notice the improvement.

If your pipeline depends on processing a huge codebase or many long PDFs in a single prompt, GPT-4.1’s ~1,047,576-token window remains the top option. Retrieval can help with GPT-5, but if your app is built around one massive input, test before switching.
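A quick pre-flight check can tell you which side of that line a job falls on. This is a rough sketch: the four-characters-per-token estimate is a heuristic, not a tokenizer, and it assumes the reserved output budget counts against the same window as the prompt.

```python
import math

GPT5_WINDOW = 400_000      # tokens, from the context table
GPT41_WINDOW = 1_047_576   # tokens, from the context table


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Very rough token estimate. Use a real tokenizer for billing-grade numbers."""
    return math.ceil(len(text) / chars_per_token)


def pick_model(prompt_tokens: int, reserved_output_tokens: int = 8_000) -> str:
    """Choose the smallest window that fits the prompt plus the reserved output."""
    needed = prompt_tokens + reserved_output_tokens
    if needed <= GPT5_WINDOW:
        return "gpt-5"
    if needed <= GPT41_WINDOW:
        return "gpt-4.1"
    return "retrieval"  # too large for a single shot on either model
```

Anything that returns `"gpt-4.1"` here is a candidate for testing GPT-5 with retrieval before you commit to the larger window.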

For very large, simple jobs like tagging or brief summaries, gpt-5-mini or gpt-5-nano may beat both 4.x and full GPT-5 on price. Benchmark them on your own data.

Example: support automation. Average ticket uses about 800 input tokens and 250 output tokens. You handle 100,000 tickets per month.

Plan: route 85% of tickets to gpt-5-nano and 15% to full gpt-5. Prices per 1M tokens: nano $0.05 in / $0.40 out; gpt-5 $1.25 in / $10 out.

| Load | Token math | Approx. cost |
| --- | --- | --- |
| Nano (85,000 tickets) | 85k × (0.8k + 0.25k) = 89.25M | Input: 68M × $0.05/M = $3.40; Output: 21.25M × $0.40/M = $8.50; Total ≈ $11.90 |
| GPT-5 (15,000 tickets) | 15k × (0.8k + 0.25k) = 15.75M | Input: 12M × $1.25/M = $15.00; Output: 3.75M × $10/M = $37.50; Total ≈ $52.50 |
| Total | 89.25M + 15.75M = 105M | ≈ $64.40 (before caching) |
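The same arithmetic as a small script, so you can plug in your own ticket volumes and token counts. Prices and the 85/15 split come from the example above. The function name is only illustrative.

```python
# Dollars per 1M tokens: (input, output), from the pricing table.
PRICES = {
    "gpt-5-nano": (0.05, 0.40),
    "gpt-5": (1.25, 10.00),
}


def monthly_cost(model: str, tickets: int, in_tokens: int = 800, out_tokens: int = 250) -> float:
    """Cost in dollars for `tickets` requests at the given average token counts."""
    price_in, price_out = PRICES[model]
    return tickets * (in_tokens * price_in + out_tokens * price_out) / 1_000_000


nano = monthly_cost("gpt-5-nano", 85_000)
full = monthly_cost("gpt-5", 15_000)
print(f"nano: ${nano:.2f}  gpt-5: ${full:.2f}  total: ${nano + full:.2f}")
```

For comparison, the same formula puts all 100,000 tickets on full GPT-5 at $350 per month, so the routed plan costs under a fifth of that before any caching.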

Routing easy tickets to nano and hard ones to full GPT-5 can cut spend while improving quality: nano handles the bulk of the volume cheaply, and GPT-5 tends to use fewer tokens and fewer tool calls on the complex tasks it does take.

| Area | GPT-5 | GPT-4.1 |
| --- | --- | --- |
| Built-in “thinking” | Automatic in ChatGPT; reasoning_effort in API (incl. minimal) | Older approach; no minimal setting |
| Output length control | verbosity (low/medium/high) | Not available |
| Tool calling | Custom tools with plaintext + grammar; parallel calls | JSON functions; more fragile with long strings |
| Context window | 400K | ~1,047,576 |
| Typical use | Agents, coding, mixed tool chains | Giant single-shot inputs |
| Pricing per 1M tokens (in/out) | $1.25 / $10 | Often $2 / $8 in docs |

If you do many code edits across files and repos, or run long chains that call multiple tools, you’ll likely see fewer failures and cleaner diffs with GPT-5.

If you read charts, dashboards, or diagrams, GPT-5’s multimodal gains help with parsing and summarizing visuals with fewer mistakes.

Scores are better, but you still need human review. Treat GPT-5 as a stronger first draft, not a final decision-maker.

OpenAI’s own “new era of work” post claims 5M paid business users and ~700M weekly users across ChatGPT. That’s not a lab score, but it does mean partner tools and third-party platforms will move fast to GPT-5 defaults. You’ll see it in Copilot and other Microsoft products too.

GPT-5 can “think deeper” when needed, but that costs time and tokens. In ChatGPT the app decides; in the API you choose with reasoning_effort. Set a default (minimal) and only raise it for tricky logic or long tool chains. Also plan for rate limits. Always keep a fallback path to mini/nano so your app doesn’t stall during spikes.
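One way to wire this up is a small router that defaults to minimal effort and walks a fallback chain when a model is rate limited. This is a sketch under assumptions: `RateLimited` and the `call` signature are hypothetical stand-ins for whatever your client wrapper raises and accepts.

```python
from typing import Callable


class RateLimited(Exception):
    """Hypothetical error raised by your client wrapper on a rate-limit response."""


# Try the strongest model first, then cheaper fallbacks.
FALLBACK_CHAIN = ["gpt-5", "gpt-5-mini", "gpt-5-nano"]


def run_with_fallback(
    call: Callable[[str, str, str], str],
    prompt: str,
    hard: bool = False,
) -> tuple[str, str]:
    """Return (model_used, answer). `call(model, effort, prompt)` performs the request."""
    effort = "high" if hard else "minimal"  # raise effort only for tricky work
    for model in FALLBACK_CHAIN:
        try:
            return model, call(model, effort, prompt)
        except RateLimited:
            continue  # move on to the next model in the chain
    raise RuntimeError("all models in the fallback chain were rate limited")
```

Returning the model name alongside the answer lets you log how often fallbacks fire, which is a useful signal for capacity planning.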

If your system prompt or knowledge pack is long, use prompt caching. The API bills cached input at a lower rate (for GPT-5, $0.125 versus $1.25 per 1M tokens), which adds up fast for high-traffic apps. Cache anything reused across requests: role instructions, policy text, schema examples.
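To size the saving, the sketch below estimates GPT-5 input cost with and without cache hits on a shared prefix. The traffic numbers are hypothetical, and the cache hit rate is an input you would have to measure rather than something this code can know.

```python
INPUT_PER_M = 1.25          # GPT-5 input, dollars per 1M tokens
CACHED_INPUT_PER_M = 0.125  # GPT-5 cached input, dollars per 1M tokens


def input_cost(requests: int, prefix_tokens: int, unique_tokens: int, hit_rate: float) -> float:
    """Input-side cost in dollars when `hit_rate` of the shared prefix is served from cache."""
    prefix_total = requests * prefix_tokens
    cached = prefix_total * hit_rate
    uncached = (prefix_total - cached) + requests * unique_tokens
    return (cached * CACHED_INPUT_PER_M + uncached * INPUT_PER_M) / 1_000_000


# Hypothetical load: 100,000 requests, 2,000-token shared prefix, 800 unique tokens each.
print(input_cost(100_000, 2_000, 800, hit_rate=0.0))
print(input_cost(100_000, 2_000, 800, hit_rate=0.9))
```

The longer the shared prefix is relative to the per-request text, the larger the share of the bill that caching can touch.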

Custom tools let you send plaintext instead of strict JSON. Add a simple grammar so outputs stay valid. This avoids the usual escaping mess with code blocks, reduces retries, and shortens runs.

Even with a large window, dumping whole PDFs or repos is wasteful. Use retrieval: store chunks, fetch only what’s relevant, and keep the prompt tight. You’ll get steadier answers and lower bills.
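The store, fetch, and trim loop can be sketched in a few lines. This version swaps in plain keyword overlap for scoring so it runs with no dependencies. A production setup would more likely use embeddings and a vector store, which this sketch does not attempt.

```python
import re
from collections import Counter


def tokens(text: str) -> list[str]:
    """Lowercase alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk(text: str, size: int = 40) -> list[str]:
    """Split text into chunks of at most `size` whitespace-separated words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return up to k chunks that share the most words with the query."""
    q = Counter(tokens(query))

    def overlap(passage: str) -> int:
        p = Counter(tokens(passage))
        return sum(min(q[t], p[t]) for t in q)

    ranked = sorted(chunks, key=overlap, reverse=True)
    return [c for c in ranked[:k] if overlap(c) > 0]  # drop chunks with no match
```

Only the retrieved chunks go into the prompt, which keeps input tokens roughly constant no matter how large the underlying document set grows.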

Most complaints about “too long” or “too short” drafts are solved by setting verbosity. Decide per task: low for actions and logs, high for reviews and reports. Lock it in your router so writers don’t need to trim or pad drafts after the fact.

Keep human review in the loop for legal, finance, or health. Store prompts and outputs safely. Turn off training on your data where required, and log tool actions so you can audit what the agent did and why.
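For the audit trail, an append-only log with one JSON record per tool action is often enough to start with. The field names below are illustrative assumptions, not a standard schema.

```python
import io
import json
from datetime import datetime, timezone
from typing import TextIO


def log_tool_action(stream: TextIO, tool: str, arguments: dict, outcome: str, reason: str) -> dict:
    """Append one JSON line describing what the agent did and why."""
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),  # UTC timestamp
        "tool": tool,
        "arguments": arguments,
        "outcome": outcome,
        "reason": reason,
    }
    stream.write(json.dumps(record) + "\n")
    return record


# Example with an in-memory buffer. In production this would be a file or a log sink.
buffer = io.StringIO()
log_tool_action(buffer, "search_tickets", {"query": "refund"}, "ok", "user asked about refund status")
```

One record per line keeps the log easy to grep and easy to load into whatever analysis tool you already use.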

| Goal | What we set up | Result |
| --- | --- | --- |
| Cut spend without losing quality | Router rules + caching for long system text | Fewer tokens on easy work; lower bill |
| Fewer broken runs | Plaintext tool calls with grammar + retries only on real failures | Less flakiness; cleaner traces |
| Faster code fixes | Diff-based edit prompts + repo guard checks | More PRs that pass on first try |
| Safer outputs | Policy prompts + sampling + human review for flagged items | Issues caught before going live |
| Clear reporting | Metrics on tokens, time, errors, success by task | Decisions based on data, not opinions |
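The reporting row can start as a simple aggregation over run records. The record fields used here (`task`, `ok`, `tokens`, `seconds`) are assumptions about what your own logging captures.

```python
from collections import defaultdict


def summarize(runs: list[dict]) -> dict[str, dict]:
    """Group run records by task and compute count, success rate, and averages."""
    grouped: dict[str, list[dict]] = defaultdict(list)
    for run in runs:
        grouped[run["task"]].append(run)

    report = {}
    for task, items in grouped.items():
        n = len(items)
        report[task] = {
            "runs": n,
            "success_rate": sum(1 for r in items if r["ok"]) / n,
            "avg_tokens": sum(r["tokens"] for r in items) / n,
            "avg_seconds": sum(r["seconds"] for r in items) / n,
        }
    return report
```

Comparing these numbers per task before and after a model or routing change gives you a grounded basis for keeping or reverting it.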

If your app relies on single huge inputs, keep GPT-4.1 or test GPT-5 with retrieval before moving.

If accuracy is already good enough, mini or nano may be your best spend. Benchmark on your own data.